Shut DEV down… at night?

Thinking about shutting down your non-production environments at night, but wondering about the benefits?

Before we started shutting down at night, we were spending a small fortune on hosting environments that simply weren’t used during nights and weekends. We also didn’t have a good recovery plan for when our infrastructure failed, so we decided to tackle both problems with one solution.

I’ll talk about what it means, the benefits, and how to get started on your own adventure of shutting down non-prod at night.

What do I mean by nightly shutdowns? #

By nightly shutdowns, I actually mean terminating all of your non-production cloud servers at a specified time (e.g. 9pm) and then starting new instances again in the morning (e.g. 7am). You can also leave these environments “off” over the weekend by simply not starting the instances on Saturday and Sunday mornings.
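To make the schedule concrete, here is a minimal sketch of the "should this environment be up right now?" decision. It assumes the example times above (7am to 9pm, weekdays only) and local time; the names `START`, `STOP` and `should_be_running` are made up for illustration.

```python
from datetime import datetime, time

# Assumed schedule, taken from the example times above: up at 7am,
# down at 9pm, and off all weekend. Adjust to suit your own teams.
START = time(7, 0)
STOP = time(21, 0)


def should_be_running(now: datetime) -> bool:
    """Return True if non-production should be up at the given local time."""
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and START <= now.time() < STOP
```

For example, `should_be_running(datetime(2024, 1, 3, 12, 0))` (a Wednesday lunchtime) is `True`, while the same time on Saturday 6 January is `False`.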

Are you crazy? That’s an insane amount of work per night! #

Who’s got time to manually turn everything off as we leave, then turn it all back on again as we arrive in the morning? Also, something could go wrong - then we’re left without a development or test environment, and it would set the teams back by several days!

Having nightly shutdowns as a goal will help you and your team achieve a few things that I believe are fundamental to running successfully in a cloud environment.

Let’s dissect the quote above. “Who’s got time”: nobody should be doing this manually, it should be completely automated. A machine tears it down, and a machine brings it back up. Every day. Exactly the same. This level of automation will enable you to quickly and reliably roll out changes to the underlying infrastructure, knowing that they will be applied automatically in the morning.

“Something could go wrong”: we hope not, but wouldn’t you rather find out that your deployment/automation scripts were broken in your development/testing environments than in production during a disaster recovery scenario? The reality of running in a cloud environment is that any instance is at risk of being removed at any time by the cloud provider, or of crashing due to an underlying hardware failure. It’s important to design for this level of fault tolerance.

Benefits of nightly shutdowns #

There are three main benefits:

- Cost saving

- Never having to patch instances

- Testing fault tolerance

Cost Saving #

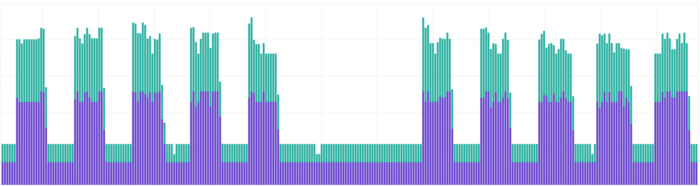

This is the easiest to quantify, so here’s a graph of instance costs broken down per hour. You can clearly see the weekdays, and the weekend gaps where we keep our two environments offline.

There are roughly 730 hours per month, but only 160 working hours (based on an 8hr day, over 20 days). If you allow for a bit of flex in the working times (e.g. 7am - 9pm, so 14 hours a day), it’s 280 hours. That’s roughly 38% of the total monthly hours, so you’d potentially pay for only around 40% of what an always-on environment costs!
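The arithmetic can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: it assumes costs scale linearly with instance hours and ignores anything billed while instances are off (storage, for example). The constant and function names are made up for illustration.

```python
HOURS_PER_MONTH = 730      # roughly 365 * 24 / 12
WORKING_DAYS = 20          # weekdays in a typical month
HOURS_ONLINE_PER_DAY = 14  # 7am to 9pm


def online_fraction(hours_per_day: float,
                    days: int = WORKING_DAYS,
                    total: int = HOURS_PER_MONTH) -> float:
    """Fraction of the month the environment is actually running."""
    return hours_per_day * days / total


fraction = online_fraction(HOURS_ONLINE_PER_DAY)
print(f"online {fraction:.0%}, potential saving {1 - fraction:.0%}")
# online 38%, potential saving 62%
```

Swapping in a strict 8 hour day (`online_fraction(8)`) gives the 160 hour figure instead, which is about 22% of the month.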

Never having to patch instances #

One of my philosophies in life is never to patch instances, so we don’t. We terminate and get new instances every day on non-production (and roll weekly on production). This means we also get the latest image that’s available (ready with the latest updates and security fixes).

Patching a running instance in place can be risky and time-consuming, and if you’re updating the kernel it will sometimes require a full restart. If you’re restarting anyway, why not get a brand new, fresh instance?

As we also run stateless services, and all the logs are streamed off each server to a centralised service, we don’t need to worry about log rotation or disks filling up. We can terminate the disks at the same time as our instances, and get new ones.

Testing fault tolerance #

Ever heard of Chaos Kong? He’s part of Netflix’s Simian Army, and is the tool that kills an entire region. Whilst we’re not completely simulating that with a nightly shutdown, we are going a significant way towards testing all our startup scripts and plans.

Every day we get to check and test (automatically) that all of our services can survive being completely offline for a number of hours, and resume working normally later. We also check that they come back without any human intervention once the servers start up. We can also take measurements to see whether the boot process is slowing down or speeding up over time.
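One way to take that measurement is to poll a health check after the morning start and record how long it takes to pass. The sketch below is an assumption about how you might do it, not a description of our tooling: `time_until_healthy` is a made-up helper, and `is_healthy` is whatever check suits your services (an HTTP ping, for instance).

```python
import time
from typing import Callable


def time_until_healthy(
    is_healthy: Callable[[], bool],
    timeout: float = 900.0,
    interval: float = 10.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Poll is_healthy until it returns True and return the elapsed seconds.

    Raises TimeoutError if the check has not passed within `timeout` seconds.
    `clock` and `sleep` are injectable so the function can be tested quickly.
    """
    started = clock()
    while True:
        if is_healthy():
            return clock() - started
        if clock() - started >= timeout:
            raise TimeoutError(f"not healthy after {timeout} seconds")
        sleep(interval)
```

Logging the returned number every morning gives you a simple trend line for boot time, and the `TimeoutError` doubles as an alert that the environment did not come back on its own.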

I can now sleep at night, knowing that if we need to invoke our disaster recovery procedures, all our startup scripts will work as expected - as they’ve been tested in a real environment every day.

Thinking of getting started? #

- Check in with your team, and anyone using these environments - find out what times work for them to be offline! This whole idea unfortunately doesn’t work very well if you have an off-shore team on the other side of the world.

- Check that your instances are completely automated, and need no human to touch them to go from not existing to fully working.

- This is a huge topic in itself, and it depends on your application and environment. We use AWS EC2 to host our applications, and rely heavily on User Data scripts in our auto scaling launch configurations to set the application up in the correct state on boot.

- The auto scaling group maintains the number of instances we ask it to run, and in some cases this is where we put the schedule. In other cases we run it from a CircleCI schedule that triggers the AWS CLI to do the same job, so that we can easily run the “scale-up” workflow by hand to bring the environment online.

- Manually terminate instances to test it out first; they should come back automatically if you’re using an auto scaling group. This can give you the confidence to try it nightly.

- One way to automate this is to use an auto scaling group (or similar). Set a schedule to scale up and down - this will automatically terminate instances and start new ones at your specified times.
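As a rough illustration of that last step, here is a sketch that builds two scheduled actions for an auto scaling group and hands them to boto3's `put_scheduled_update_group_action`. The group name, instance count, action names, cron times and time zone are all placeholders I've assumed for the example, and `build_schedule` and `apply_schedule` are made-up helpers rather than our actual setup.

```python
def build_schedule(group_name, running_size,
                   start_cron="0 7 * * 1-5",   # 7am, Monday to Friday
                   stop_cron="0 21 * * 1-5",   # 9pm, Monday to Friday
                   timezone="Etc/UTC"):        # placeholder, use your own
    """Build keyword arguments for the scale-up and scale-down actions."""
    common = {"AutoScalingGroupName": group_name, "TimeZone": timezone}
    scale_up = {
        **common,
        "ScheduledActionName": "morning-scale-up",  # hypothetical name
        "Recurrence": start_cron,
        "MinSize": running_size,
        "MaxSize": running_size,
        "DesiredCapacity": running_size,
    }
    scale_down = {
        **common,
        "ScheduledActionName": "nightly-scale-down",  # hypothetical name
        "Recurrence": stop_cron,
        "MinSize": 0,
        "MaxSize": 0,
        "DesiredCapacity": 0,
    }
    return [scale_up, scale_down]


def apply_schedule(client, actions):
    """Create or update each scheduled action on the auto scaling group."""
    for action in actions:
        client.put_scheduled_update_group_action(**action)


# Usage (needs boto3 and AWS credentials; "dev-web-asg" is a made-up name):
#   import boto3
#   apply_schedule(boto3.client("autoscaling"),
#                  build_schedule("dev-web-asg", running_size=2))
```

Because neither action fires on a Saturday or Sunday, the group stays at zero from Friday night until Monday morning. Keeping the dictionary building separate from the AWS call makes it easy to review or test the schedule before touching a real environment.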

Let me know how you get on! @florx

Credits #

- “Off” Photo by Aleksandar Cvetanovic on Unsplash

- “Demolition” Photo by Science in HD on Unsplash

- “Fresh” Photo by Pietro De Grandi on Unsplash

- “Game On” Photo by 甜心之枪 Sweetgun on Unsplash