Today is International Backup Awareness Day

Of course, that’s the joke. Every day is International Backup Awareness Day. You should go and check your backups now. It’s OK - I can wait… I should probably go and check mine too.

You have backups, right? As I mentioned in a previous post, Zero to development of an idea, I have a little dev box that I love to use for my side projects. There’s no point in setting up a separate backup strategy for each of them: they are all so small that maintaining one each would be infeasible.

Instead I go for a multi-faceted approach.

- Use Git repositories to store the code in BitBucket. That way it’s on my development workstation and on a cloud host (and one would hope they back up too).

- Use Amazon Web Services Simple Storage Service (S3) to save my entire /var/www/html directory to a bucket for safe keeping.

Here’s how I make it work:

s3cmd sync -r /var/www/html/ s3://bucketname/backupwww/

I love s3cmd. It just works, and it can configure itself using s3cmd --configure. Easy. This is on a cron job that runs every hour, and it only uploads the files needed to bring the bucket up to date. Saves on bandwidth too!
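A minimal sketch of how that hourly job could be wired up. The function name sync_www, the script path and the log file are made up for illustration, and bucketname is the same placeholder as in the command above.

```shell
#!/bin/sh
# Sketch of an hourly sync wrapper (names and paths are assumptions).
SRC="/var/www/html/"
DEST="s3://bucketname/backupwww/"

sync_www() {
    # sync only uploads files that are new or changed since the last run
    s3cmd sync -r "$SRC" "$DEST"
}

# Only do the work when called with "run", so the file can be sourced safely.
if [ "${1:-}" = "run" ]; then
    sync_www
fi

# Example crontab entry (hypothetical path), firing at minute 0 of every hour:
#   0 * * * * /usr/local/bin/backup-www.sh run >> /var/log/backup-www.log 2>&1
```

Sending the output to a log file means there is something to look at when you come to check that the backups are really running.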

So my code is in 4 places: my workstation, BitBucket, the dev box itself and S3. I think that’s enough redundancy for some side projects usually used for tinkering.

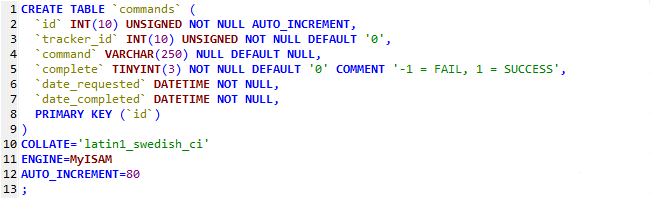

Now how about that MySQL database? I have written a script that runs mysqldump against all of the databases every day, then uploads the raw SQL files to S3. I have lifecycle rules set up on the bucket to keep 30 days’ worth before old SQL files start getting pruned.
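Here is a rough sketch of what a daily dump script along those lines might look like. It is not the actual script: DUMP_DIR, DB_DEST and dump_and_upload are hypothetical names, it writes one file for all databases, and it assumes the MySQL credentials live in ~/.my.cnf so nothing sensitive appears on the command line.

```shell
#!/bin/sh
# Sketch of a daily MySQL dump and upload (names and paths are assumptions).
DUMP_DIR="/var/backups/mysql"
DB_DEST="s3://bucketname/backupsql/"

dump_and_upload() {
    stamp="$(date +%Y-%m-%d)"
    outfile="$DUMP_DIR/all-databases-$stamp.sql"
    mkdir -p "$DUMP_DIR"
    # Dump every database into one dated SQL file; stop if the dump fails
    mysqldump --all-databases > "$outfile" || return 1
    # Upload the raw SQL; pruning is left to the bucket's lifecycle rules
    s3cmd put "$outfile" "$DB_DEST"
}

# Only do the work when called with "run", so the file can be sourced safely.
if [ "${1:-}" = "run" ]; then
    dump_and_upload
fi
```

Dating the file name keeps each day's dump as a separate object in the bucket, which is what lets an age-based lifecycle rule expire them one at a time.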

I wouldn’t like to have to rewrite all of my SQL scripts if I lost them all…

I have one improvement that I would like to make, which is to compress the SQL into a tarball before uploading it.
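That compression step could be as small as this. It is a sketch only: compress_dumps is a hypothetical helper, and it assumes the SQL files sit in their own directory with the archive written somewhere else.

```shell
# compress_dumps DIR ARCHIVE
# Pack everything in DIR into a gzipped tarball at ARCHIVE.
# Keep ARCHIVE outside DIR so tar does not try to include its own output.
compress_dumps() {
    tar -czf "$2" -C "$1" .
}

# Example (hypothetical paths):
#   compress_dumps /var/backups/mysql "/tmp/mysql-$(date +%Y-%m-%d).tar.gz"
```

SQL dumps are plain text, so they tend to compress very well, which helps on both bandwidth and storage.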

Then there’s server configuration. Ideally I would be running some fancy Puppet setup with a master node controlling the software on the instance, so I could drop and recreate it at a moment’s notice. However, that is simply overkill for what I need.

I did a complete refresh (read: delete everything, and rebuild from scratch) of the server a few weeks ago, and in doing so I created a Git repository that has all of the server configuration I need in there. Things like httpd.conf or vsftpd.conf are checked in and version controlled. If I make a change on the server, I make the same change locally and check it in.

The final improvement on my list is to create a script that automatically copies these files into the repo on the server every day and checks in the changes if there are any. That way I get notified of any changes and they’re also backed up.
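A sketch of what such a script might look like. CONFIG_REPO, CONFIG_FILES and snapshot_config are hypothetical names, the file paths are guesses at a typical layout, and the repo is assumed to be already cloned on the server with a Git identity configured.

```shell
#!/bin/sh
# Sketch: copy live config files into a Git working copy, commit if changed.
# All names and paths below are assumptions; adjust to your own server.
CONFIG_REPO="/root/server-config"
CONFIG_FILES="/etc/httpd/conf/httpd.conf /etc/vsftpd/vsftpd.conf"

snapshot_config() {
    for f in $CONFIG_FILES; do
        # Skip anything that is missing rather than failing the whole run
        if [ -f "$f" ]; then
            cp "$f" "$CONFIG_REPO/"
        fi
    done
    git -C "$CONFIG_REPO" add -A
    # diff --cached --quiet exits non-zero only when something is staged
    if ! git -C "$CONFIG_REPO" diff --cached --quiet; then
        git -C "$CONFIG_REPO" commit -q -m "Config snapshot $(date +%Y-%m-%d)"
    fi
}

# Only do the work when called with "run", so the file can be sourced safely.
if [ "${1:-}" = "run" ]; then
    snapshot_config
fi
```

Committing only when something is staged keeps the history free of empty daily commits, so every commit in the log is a real change to look at.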

Lastly, you should check your backup solution every so often: not just that it’s backing up as you expect, but also that you can restore. This is just as important! Create a temporary folder and see if you can extract your archives as expected. Create a local database and see if your SQL files will run correctly on the server to create the database from scratch. Otherwise your backups will be all for nothing.
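The two checks described above can be sketched as a pair of small helpers. Both function names are hypothetical, the archive is assumed to be a gzipped tarball, and the SQL replay should be pointed at a throwaway local MySQL server rather than anything you care about.

```shell
# check_archive ARCHIVE
# Extract into a throwaway directory and confirm that files actually came out.
check_archive() {
    scratch="$(mktemp -d)" || return 1
    if tar -xzf "$1" -C "$scratch" && [ -n "$(ls -A "$scratch")" ]; then
        echo "OK: $1 extracted"
        status=0
    else
        echo "FAILED: $1 did not extract cleanly" >&2
        status=1
    fi
    rm -rf "$scratch"
    return "$status"
}

# check_sql_dump FILE
# Replay a dump through the mysql client; a non-zero exit means it did not load.
check_sql_dump() {
    mysql < "$1"
}
```

Both helpers return a non-zero status on failure, so they can be chained in a script that stops at the first backup that will not restore.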

I’ve just put a recurring reminder in my calendar to do it every few months. Google Calendar has a new ‘Reminder’ feature for this, which is really handy.

Are your backups running? Or are they now that this has reminded you? Let me know - @florx