AWS Managed Prometheus and Grafana

In the past few weeks, AWS has released two exciting new services into preview. The first is Amazon Managed Service for Prometheus (AMP), a Prometheus-compatible managed monitoring service for storing and querying metrics at scale. The second is Amazon Managed Service for Grafana (AMG), which, as you would expect, is a fully managed data visualisation service running on logically isolated servers. AWS handles all the provisioning, scaling and security automatically.

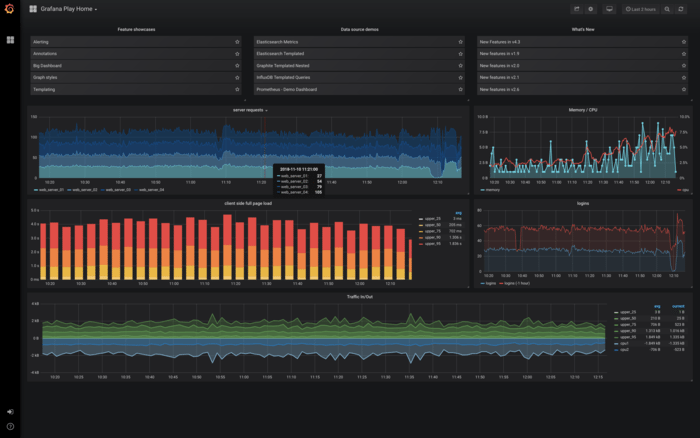

AMG (Grafana) is currently in limited preview, so I got myself access and have had a play to see how the two managed services work together in some of the common ways I see customers using AWS.

It's worth bearing in mind that both products are in preview and are therefore available only in certain regions. AMG (Grafana) is limited to us-east-1 and eu-west-1. AMP (Prometheus) is slightly wider but still limited to us-east-1, us-east-2, us-west-2, eu-west-1 and eu-central-1. If you want both services in the same region, that currently means us-east-1 or eu-west-1.

My first impression is that these services will relieve a significant pain point for the many teams and customers currently managing their own Prometheus and Grafana stacks. Running Prometheus can be simple at first, but running it in a highly available and scalable way can be tricky, especially as it is a stateful application that stores metrics on disk. There are several ways to scale storage, for example using remote reads and writes with something like Thanos. High availability matters most when you also rely on Prometheus for incident management, or use Prometheus Alertmanager to draw attention to failing or degraded system components.

If you expect AMP to be a fully-fledged Prometheus server that you can configure to scrape your targets, you'll be disappointed. As a "Prometheus-compatible" managed service, AMP offers no scrape configuration at all: the only way to get metrics into it is to push them. AWS has, however, offered several solutions to this problem.

If you are already running Prometheus yourself somewhere, you can immediately enable remote writes to start sending metrics to the managed service. Remote writes increase Prometheus's memory usage, typically by around 25% depending on the shape of your data, so if you are close to your memory limits you may wish to scale up before enabling this option.
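A minimal sketch of the remote-write route, assuming a Prometheus release recent enough to support the `sigv4` block under `remote_write` (older releases need a SigV4 signing proxy in front of AMP instead). The function names and the `ws-EXAMPLE` workspace ID are hypothetical placeholders, and the memory check simply applies the 25% overhead figure above as a default; your real overhead depends on your series and sample rates. The config is printed as JSON, which YAML parsers generally accept, so it can be pasted into `prometheus.yml` or converted by hand.

```python
import json

# AMP remote-write endpoint format; region and workspace ID are filled in below.
AMP_REMOTE_WRITE = (
    "https://aps-workspaces.{region}.amazonaws.com"
    "/workspaces/{workspace_id}/api/v1/remote_write"
)


def remote_write_section(region: str, workspace_id: str) -> dict:
    """Build a Prometheus `remote_write` section pointing at an AMP workspace.

    Assumes Prometheus signs requests itself via the `sigv4` block.
    """
    url = AMP_REMOTE_WRITE.format(region=region, workspace_id=workspace_id)
    return {"remote_write": [{"url": url, "sigv4": {"region": region}}]}


def headroom_after_remote_write(current: float, limit: float, overhead: float = 0.25) -> float:
    """Memory left after adding the remote-write overhead (same units as inputs).

    A negative result suggests scaling up before enabling remote write.
    """
    return limit - current * (1 + overhead)


if __name__ == "__main__":
    print(json.dumps(remote_write_section("eu-west-1", "ws-EXAMPLE"), indent=2))
    print(headroom_after_remote_write(6, 8))  # 0.5 GiB spare with 6 of 8 GiB used
    print(headroom_after_remote_write(7, 8))  # -0.75: scale up first
```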

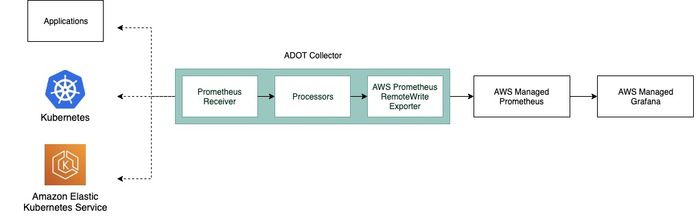

Otherwise, if you are starting from scratch, or want to scrape metrics without changing your existing Prometheus setup, the other option is to run the AWS Distro for OpenTelemetry (ADOT) Collector. The collector scrapes targets using receivers and forwards the metrics to AMP using exporters. There are configuration examples for Amazon EKS that get you started quickly, including a starter project to check everything is working correctly.
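To make the receiver/exporter split concrete, here is a sketch that builds a minimal collector pipeline as a Python dict: a `prometheus` receiver holding an ordinary scrape config, wired to an exporter that writes to AMP. The exporter name `awsprometheusremotewrite` and its `aws_auth` keys follow ADOT examples from around the preview and may differ in newer releases, so treat them as assumptions and check the current ADOT documentation. The job name, target and workspace ID are hypothetical placeholders.

```python
import json


def adot_collector_config(region: str, workspace_id: str, scrape_target: str) -> dict:
    """Minimal ADOT Collector pipeline: scrape with a receiver, push to AMP with an exporter."""
    endpoint = (
        f"https://aps-workspaces.{region}.amazonaws.com"
        f"/workspaces/{workspace_id}/api/v1/remote_write"
    )
    return {
        "receivers": {
            # The prometheus receiver embeds a standard Prometheus scrape config.
            "prometheus": {
                "config": {
                    "global": {"scrape_interval": "15s"},
                    "scrape_configs": [
                        {
                            "job_name": "example-app",  # hypothetical job
                            "static_configs": [{"targets": [scrape_target]}],
                        }
                    ],
                }
            }
        },
        "exporters": {
            # Assumed exporter name/keys; requests are SigV4-signed for the "aps" service.
            "awsprometheusremotewrite": {
                "endpoint": endpoint,
                "aws_auth": {"region": region, "service": "aps"},
            }
        },
        "service": {
            "pipelines": {
                "metrics": {
                    "receivers": ["prometheus"],
                    "exporters": ["awsprometheusremotewrite"],
                }
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(adot_collector_config("us-east-1", "ws-EXAMPLE", "localhost:8080"), indent=2))
```

The `service.pipelines.metrics` entry is what connects the pieces: a receiver or exporter that is defined but not listed in a pipeline does nothing.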

A note on security: AMG (Grafana) requires AWS SSO. Although AWS introduced SSO a few years ago, I have yet to see wide adoption among customers. That may prove a sticking point, as AWS SSO has some prerequisites. You can read the full list in the AWS documentation, but in summary, you need AWS Organizations set up with all features enabled, not just consolidated billing.

One of the lovely setup features of AMG (Grafana) is that you can choose to integrate a handful of services and grant access via IAM. This enables fast integration with Amazon CloudWatch, Amazon Elasticsearch Service, AMP (Prometheus), Amazon Timestream, AWS X-Ray, AWS IoT SiteWise and Amazon SNS, all by checking a few boxes when setting up the workspace. Also, to keep metrics traffic entirely within your VPC, AMP (Prometheus) supports interface VPC endpoints, so metrics are not transmitted over the internet.
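As a sketch of the VPC endpoint setup, the function below builds the arguments for boto3's EC2 `create_vpc_endpoint` call. It assumes `com.amazonaws.<region>.aps-workspaces` as the AMP ingestion/query endpoint service name; the VPC, subnet and security group IDs are hypothetical placeholders. The AWS call itself is left as a comment so the example runs without credentials.

```python
def amp_vpc_endpoint_request(region: str, vpc_id: str, subnet_ids: list, security_group_ids: list) -> dict:
    """Keyword arguments for an interface VPC endpoint to AMP (assumed service name)."""
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.aps-workspaces",
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
        # Private DNS lets the normal AMP hostname resolve to the endpoint inside the VPC.
        "PrivateDnsEnabled": True,
    }


if __name__ == "__main__":
    req = amp_vpc_endpoint_request("eu-west-1", "vpc-EXAMPLE", ["subnet-EXAMPLE"], ["sg-EXAMPLE"])
    print(req)
    # With credentials configured:
    # import boto3
    # boto3.client("ec2", region_name="eu-west-1").create_vpc_endpoint(**req)
```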

AMP's pricing seems hugely competitive, especially compared with the soft costs of managing and supporting Prometheus yourself; AWS provides a worked example on the AMP pricing page. Unfortunately, the same cannot be said for AMG (Grafana): at $9 per active editor and $5 per active viewer per month, the costs quickly ramp up. A team of twenty engineers who can edit dashboards, plus ten viewers, comes to $230 per month (20 × $9 + 10 × $5, assuming all are active users). Given that Grafana is straightforward to set up, and can quickly be deployed as Infrastructure as Code, a self-managed installation may be more cost-effective.
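A quick calculator for the AMG figures above. It assumes $5 per active viewer alongside the $9 editor rate: that is AWS's listed AMG viewer price and the rate implied by the $230 example. Prices may change after the preview, so treat the constants as inputs to update.

```python
EDITOR_PRICE_USD = 9  # per active editor per month
VIEWER_PRICE_USD = 5  # per active viewer per month (assumed; see lead-in)


def amg_monthly_cost(editors: int, viewers: int) -> int:
    """Monthly AMG cost in USD, assuming every licensed user is active."""
    return editors * EDITOR_PRICE_USD + viewers * VIEWER_PRICE_USD


if __name__ == "__main__":
    print(amg_monthly_cost(20, 10))       # 230
    print(amg_monthly_cost(20, 10) * 12)  # 2760 per year
```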

All in all, it is effortless to use AMG (Grafana) to query metrics once they are in AMP (Prometheus). Existing metrics in Amazon CloudWatch, Amazon Timestream and the other integrated services are also immediately available for querying. Getting metrics into AMP is not as straightforward, and I would recommend the ADOT Collector if you are starting to monitor a new stack. If you have an existing stack, trying out remote writes from your current Prometheus server is a slick way to begin publishing metrics to a highly available managed service. However, watch out for remote write performance issues, and note that a single Prometheus instance is still a single point of failure for scraping. To address this, consider running multiple Prometheus servers and using AMP's deduplication.
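AMP's deduplication is designed for HA pairs: each Prometheus replica scrapes the same targets and sets the same external labels except for a replica label (the AWS documentation uses `cluster` and `__replica__`). The toy below only illustrates the general leader-election idea behind this, not AMP's actual implementation: per cluster, samples from one elected replica are accepted and the rest dropped, with failover when the elected replica goes quiet. The class names and the 30-second failover timeout are hypothetical choices for the sketch.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Sample:
    cluster: str      # value of the `cluster` external label
    replica: str      # value of the `__replica__` external label
    timestamp: float  # seconds
    value: float


class ReplicaDeduplicator:
    """Toy HA deduplicator: per cluster, accept samples from one elected replica,
    failing over if it sends nothing for longer than `failover_timeout` seconds."""

    def __init__(self, failover_timeout: float = 30.0) -> None:
        self.failover_timeout = failover_timeout
        # cluster -> (elected replica, timestamp of its last accepted sample)
        self.elected: dict[str, tuple[str, float]] = {}

    def accept(self, s: Sample) -> bool:
        current = self.elected.get(s.cluster)
        if current is None or current[0] == s.replica:
            # First sample for this cluster, or a sample from the elected replica.
            self.elected[s.cluster] = (s.replica, s.timestamp)
            return True
        if s.timestamp - current[1] > self.failover_timeout:
            # The elected replica has gone quiet: fail over to this one.
            self.elected[s.cluster] = (s.replica, s.timestamp)
            return True
        return False  # duplicate from a standby replica


if __name__ == "__main__":
    dedup = ReplicaDeduplicator(failover_timeout=30)
    samples = [
        Sample("prod", "a", 0, 1.0),
        Sample("prod", "b", 0, 1.0),   # dropped: "a" is elected
        Sample("prod", "a", 15, 2.0),
        Sample("prod", "b", 15, 2.0),  # dropped
        Sample("prod", "b", 60, 3.0),  # accepted: "a" silent for 45s
    ]
    print([dedup.accept(s) for s in samples])  # [True, False, True, False, True]
```

The point of the failover rule is the one made above: if one Prometheus server stops scraping, the other keeps the series flowing instead of leaving a gap.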

In summary, if you're already using Prometheus, I would recommend investigating AMP for your project immediately. It promises to take away most of the pain of running a Prometheus server, and seems to deliver on that promise. If you're already using Grafana and your installation is painful to run, give AMG a go; otherwise, stick with what you're running now.

Are you using CloudWatch and not yet using anything like Prometheus or Grafana? Unless you have a specific reason to move to Grafana for presenting metrics, or to Prometheus for storing CloudWatch metrics, I recommend sticking with CloudWatch directly.

I want to commend the AWS teams behind AMP and AMG: both look like excellent services that will significantly improve operational efficiency, freeing teams to spend more time delivering value to their end users.

Give these services a try, and let me know what you thought — @florx